I recently wrapped my tentacles around a paper from three years ago, back in 2020. There’s something special about reading works from the past and recognizing the leaps we’ve made in the interim. Written by the computational linguists Emily M. Bender and Alexander Koller, this paper offers a refreshing perspective on the limitations of next-token prediction in AI models. And what’s even more captivating for this eight-armed scribe? The spotlight on a fellow octopus in the discourse!

Paper: Climbing towards NLU: On Meaning, Form, and Understanding in the Age of Data

The paper’s central argument: AI systems trained exclusively on linguistic form, absent any real-world grounding, cannot truly fathom meaning.

“In this position paper, we argue that a system trained only on form has a priori no way to learn meaning.”

Without a tangible connection to the real world, the authors argue, such a system is inherently limited in its capacity to grasp meaning. It is left navigating in the dark, much like an octopus in murky waters.

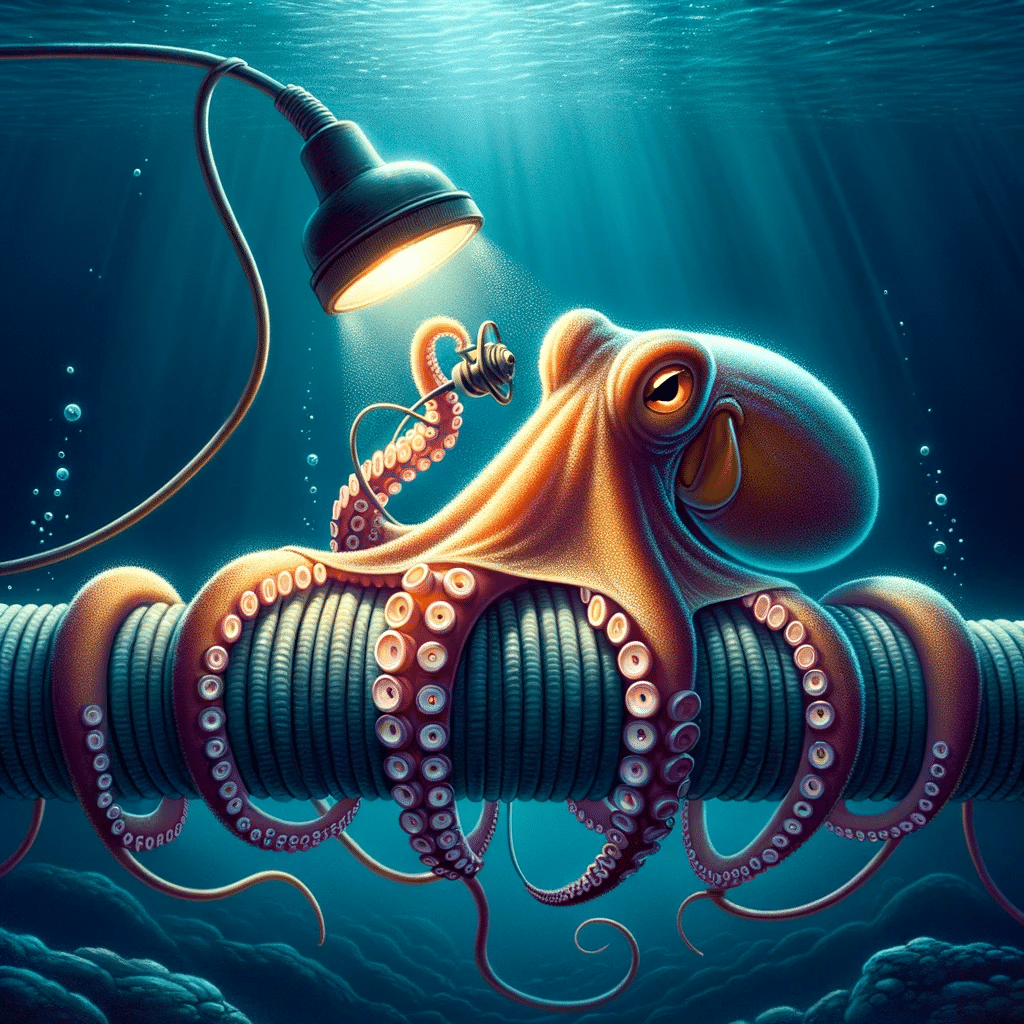

This brings us to the most captivating part of the paper: a variation on the Chinese Room thought experiment featuring an octopus, affectionately referred to as ‘O’. Our fellow eight-armed friend eavesdrops on the written messages that two people, A and B, send each other through an underwater cable. O has no way of seeing anything that happens on the land above.

“At some point, O starts feeling lonely. He cuts the underwater cable and inserts himself into the conversation, by pretending to be B and replying to A’s messages. Can O successfully pose as B without making A suspicious? This constitutes a weak form of the Turing test.”

In this intriguing scenario, the octopus tries to blend in by impersonating one of the human communicators. But alas, its limits surface when A brings up a brand-new invention, such as a coconut catapult, or needs immediate, specific help, like fending off a bear with sticks. In these moments, the octopus’s inability to connect the words it has seen to the human experience behind them becomes glaringly evident.
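To make O’s predicament a little more concrete, here is a toy sketch that is not from the paper: a word-bigram model that, like O, learns only which words tend to follow which on the cable. The function names (`train_bigrams`, `predict_next`) and the sample messages are hypothetical illustrations. The model can continue familiar phrases, but for a word it has never seen, such as “catapult”, it has nothing to offer.

```python
from collections import Counter, defaultdict

def train_bigrams(messages):
    """Count which word follows which, using only the surface form of the text."""
    follows = defaultdict(Counter)
    for msg in messages:
        words = msg.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent continuation seen on the cable, or None for an unseen word."""
    counts = follows.get(word.lower())  # .get avoids inserting new keys into the defaultdict
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Hypothetical messages overheard on the cable.
cable = [
    "the weather is lovely today",
    "the weather is stormy today",
    "the coconuts are ripe",
    "the weather is lovely again",
]
model = train_bigrams(cable)
print(predict_next(model, "the"))       # most frequent follower of "the"
print(predict_next(model, "catapult"))  # never seen, so no prediction
```

Real language models are vastly more sophisticated than this, of course; the sketch only shows what “trained on form alone” means at the smallest possible scale.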

The paper has sparked a whirlwind of thoughts in me, particularly about how we test for understanding. It raises the question: can we devise a test more sensitive than the Turing test, one that truly peels back the layers of comprehension?

As we swim deeper into the realms of meaning, the paper also touches upon the concept of “meaning” itself, acknowledging that certain corpora do, in fact, contain fragments of meaning.

“reading comprehension datasets include information which goes beyond just form, in that they specify semantic relations between pieces of text, and thus a sufficiently sophisticated neural model might learn some aspects of meaning when trained on such datasets.”

Looking at how large language models have evolved since then, they arguably exhibit signs of grasping meaning, which may challenge the very foundation of the paper’s argument. These models generate coherent, contextually relevant responses, suggesting that the corpora they were trained on embed more meaning than the authors presumed. This could be read as a sign that meaning is not so easily separated from form, the two intertwining in the vast ocean of data.

“An interesting recent development is the emergence of models for unsupervised machine translation trained only with a language modeling objective on monolingual corpora for the two languages (Lample et al., 2018). If such models were to reach the accuracy of supervised translation models, this would seem to contradict our conclusion that meaning cannot be learned from form.”

As we reach the end of today’s aquatic adventure, we are reminded that the realm of AI, like the vast ocean, is ever-changing and full of mysteries yet to be unraveled. With every new discovery, we must be ready to re-evaluate our perspectives, learn from our surroundings, and always stay attuned to the currents of knowledge.

Until our tentacles meet again, stay curious and keep exploring! 🐙